The Enactive Torch (ET) gives its user a sense of “sight” through touch. A distance sensor detects objects between 30 cm and 12 m away and passes each reading to the microcontroller, which sets the intensity of the vibration delivered to the user. The closer an object is, the more intense the vibration becomes. This feedback allows individuals to navigate and interact with the environment in front of them without relying on eyesight. In addition to the distance-dependent vibration, the ET reports its own acceleration, its orientation, and the distance to the detected object (in centimeters) to a computer. The acceleration and distance are displayed in real time through the Arduino software, giving the operator continuous information on the device's performance.
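The distance-to-vibration relationship described above can be illustrated with a short sketch. This is not the ET firmware: the linear mapping, the 8-bit intensity scale, the clamping of out-of-range readings, and the name `vibration_intensity` are all assumptions made for illustration. Only the 30 cm to 12 m range and the "closer means stronger" rule come from the description.

```python
MIN_RANGE_CM = 30      # nearest detectable distance stated in the text
MAX_RANGE_CM = 1200    # farthest detectable distance stated in the text (12 m)
MAX_INTENSITY = 255    # assumed 8-bit drive level; not taken from the ET itself


def vibration_intensity(distance_cm: float) -> int:
    """Map a distance reading to a vibration level (closer = stronger).

    Hypothetical linear mapping; the real device may use a different curve.
    """
    # Clamp the reading to the sensor's stated working range.
    clamped = max(MIN_RANGE_CM, min(MAX_RANGE_CM, distance_cm))
    # Closeness is 1.0 at 30 cm and falls linearly to 0.0 at 12 m.
    closeness = (MAX_RANGE_CM - clamped) / (MAX_RANGE_CM - MIN_RANGE_CM)
    return round(closeness * MAX_INTENSITY)


if __name__ == "__main__":
    # Print the assumed intensity for a few sample distances.
    for d in (30, 100, 500, 1200):
        print(f"{d:>5} cm -> intensity {vibration_intensity(d)}")
```

Under these assumptions, an object at the near limit produces the strongest vibration and an object at or beyond 12 m produces none. A nonlinear curve could be substituted if finer resolution at short range is wanted.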

The ET will continue to be iterated so that it can be further optimized for experimental use in the lab. Planned updates will allow two ETs to communicate with each other so that a user can receive information from both simultaneously. Future plans also include new modes to accommodate new experimental tasks. Some of these modes will allow an individual at the computer to set the vibration intensities of two ETs held by another individual. Modes like these will help collect valuable data in upcoming trials.

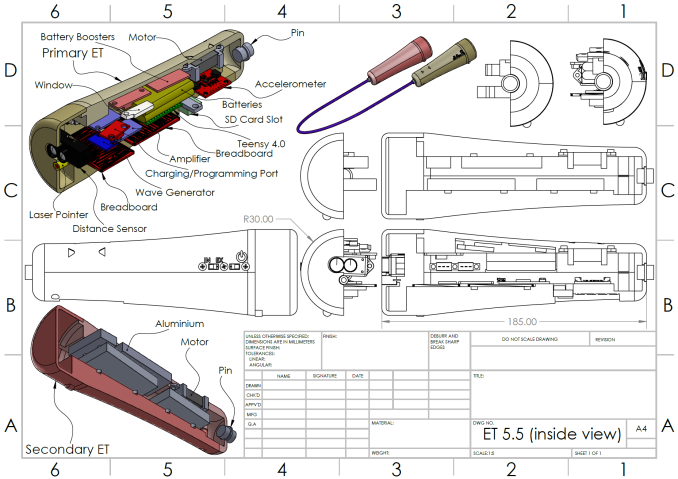

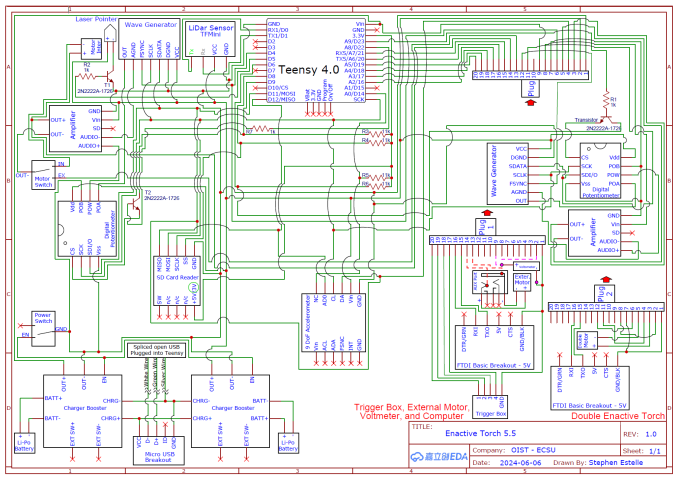

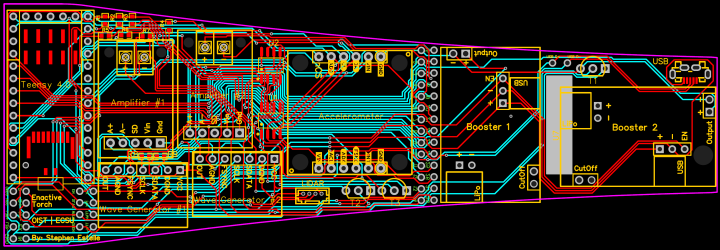

The ET was programmed in the Arduino software, with a Teensy 4.0 as the microcontroller. Currently, two 5 V power boosters supply the sensor, motor, accelerometer, wave generator, SD card reader, laser pointer, digital potentiometers, amplifiers, a Linear Resonant Actuator, and the microcontroller, all housed inside the casing. The housing was initially designed and assembled in SolidWorks and fabricated by 3D printing in 3201PA-F Nylon using Selective Laser Sintering (SLS) technology.

Relevant Publications

- Sangati, E., Lobo, L., Estelle, S., Sangati, F., Tavassoli, S., & Froese, T. (2023). Uncovering the Role of Intention in Active and Passive Perception. Proceedings of the Annual Meeting of the Cognitive Science Society, 45, 663-670.