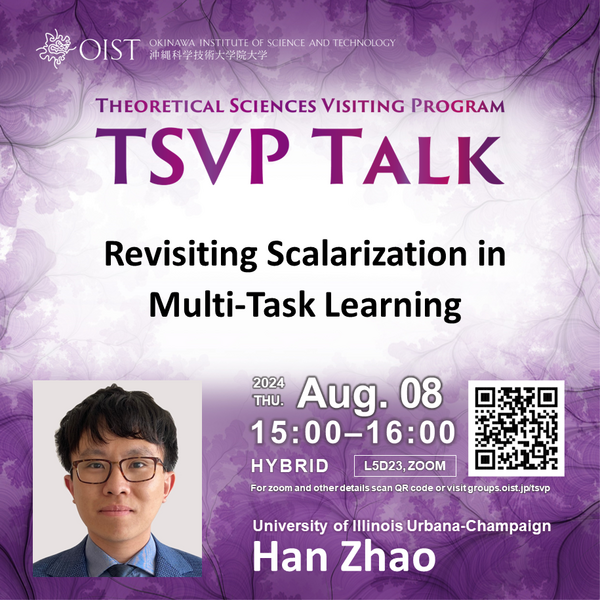

TSVP Talk: "Revisiting Scalarization in Multi-Task Learning" by Han Zhao

Description

Title: Revisiting Scalarization in Multi-Task Learning

Abstract: Multi-task learning (MTL) is a paradigm that allows the model to simultaneously learn from multiple tasks for better generalization. Linear scalarization, i.e., combining all loss functions by a weighted sum, has been the default choice in the literature of MTL since its inception. In recent years, there has been a surge of interest in developing Specialized Multi-Task Optimizers (SMTOs) that treat MTL as a multi-objective optimization problem. However, it remains open whether there is a fundamental advantage of SMTOs over scalarization. In fact, heated debates exist in the community comparing these two types of algorithms, mostly from an empirical perspective. In this talk, I will first give a brief overview of MTL and its recent advances, and then revisit scalarization from a theoretical perspective. Our findings reveal that, in the linear setting, in contrast to recent works that claimed empirical advantages of scalarization, scalarization is inherently incapable of full exploration when neural networks are under-parametrized. I will also discuss two algorithmic techniques to overcome the above limitation and conclude the talk with an open question regarding the nonlinear extension of the above conclusion.

Profile: Dr. Han Zhao is an Assistant Professor of Computer Science and, by courtesy, of Electrical and Computer Engineering at the University of Illinois Urbana-Champaign (UIUC). He is also an Amazon Visiting Academic at Amazon AI. Dr. Zhao earned his Ph.D. degree in machine learning from Carnegie Mellon University. His research interests center on trustworthy machine learning, with a focus on algorithmic fairness, robust generalization under distribution shifts, and model interpretability. He has been named a Kavli Fellow of the National Academy of Sciences and has been selected for the AAAI New Faculty Highlights program. His research has been recognized through a Google Research Scholar Award, an Amazon Research Award, and a Meta Research Award.

Personal website: https://hanzhaoml.github.io/

Google Scholar: https://scholar.google.com/citations?user=x942ipYAAAAJ&hl=en

Language: English, no interpretation.

Target audience: General audience / everyone at OIST and beyond.

Freely accessible to all OIST members and guests without registration.

This talk will also be broadcast online via Zoom:

Meeting ID: 919 9148 2123

Passcode: 841647

※ Please note that this event may be recorded and the videos uploaded. In addition, photos may be taken during the event. These are intended for publication online (the OIST website, social media, etc.). ※
