Expectations vs reality: How robots update their behavior based on their experience

Earlier this year, Google Asia Pacific made an unrestricted gift of US$30K to support Professor Jun Tani’s research. The Okinawa Institute of Science and Technology Graduate University (OIST) would like to thank Google Asia Pacific for its kind donation.

Human beings have always questioned what defines us as individuals: what drives our decision making, our perception of the world, and our sense of self. These questions have no hard answers, but they can be explored in many ways, and they lie at the heart of Prof. Jun Tani’s research at OIST. It sounds very philosophical, but his work involves neuroscience, computer science, mathematical formulas, and… robots. Prof. Tani leads the Cognitive Neurorobotics Research Unit, which aims to understand the principles of embodied cognition and mind. He does this by building robots that mimic people, on the theory that doing so might help scientists understand the neural mechanisms that define who we are.

Prof. Tani stated that, in everyday life, we often encounter situations that defy our expectations. “My Unit is developing models for robots that can deal with this unpredictability,” he explained. “Our research may help robots to socialize more realistically by learning how to predict each other’s behavior with more certainty and it sheds light on how humans update behavior based on observations. But it could also reveal the cognitive underpinnings of Autism Spectrum Disorder (ASD), as individuals with ASD often prefer to interact with the same environment repetitively to avoid error and unfamiliar social interactions.”

The underlying principle of this work is the free energy principle, proposed by Karl Friston, which holds that all life is driven by the need to reduce the difference between expectations and reality. Loosely speaking, free energy measures the difference between the states you expect to be in and the states your sensors tell you that you are in. By minimizing this difference, you minimize surprise. This minimization could occur over thousands of years, through natural selection, or in milliseconds, through individual perception, and it occurs in all living systems, from the tiniest single-celled organisms to human society.
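
To make the "milliseconds, through individual perception" case concrete, here is a minimal sketch that treats a single belief `mu` as the expected state and nudges it by gradient descent until it matches a sensor reading. It assumes a deliberately simplified energy (half the squared prediction error) and hypothetical function names; it is an illustration of the idea, not Friston's full formulation or the OIST model.

```python
# Toy "perception as free-energy minimization" sketch. Assumption: the energy
# is just half the squared gap between the expected and the sensed state;
# real free-energy formulations contain more terms than this.

def prediction_error_energy(mu: float, sensed: float) -> float:
    """Half the squared difference between expected (mu) and sensed state."""
    return 0.5 * (sensed - mu) ** 2


def perceive(mu: float, sensed: float, lr: float = 0.2, steps: int = 20) -> list[float]:
    """Update the belief by gradient descent; return the energy after each step."""
    history = []
    for _ in range(steps):
        grad = -(sensed - mu)   # derivative of 0.5 * (sensed - mu)**2 w.r.t. mu
        mu -= lr * grad         # move the expectation toward the sensation
        history.append(prediction_error_energy(mu, sensed))
    return history


if __name__ == "__main__":
    energies = perceive(mu=0.0, sensed=1.0)
    print(f"start: {prediction_error_energy(0.0, 1.0):.3f}, end: {energies[-1]:.6f}")
```

Each step shrinks the remaining gap by a constant factor (here 0.8), so the "surprise" stand-in falls steadily toward zero.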

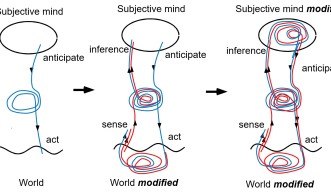

Following on from this, Prof. Tani speculates that what we sense (known as the bottom-up pathway) and what we anticipate (known as the top-down pathway) are in a constant push and pull: when one side pushes harder, the other side pulls back. If what we anticipate is very strong, we disregard what we are sensing. If, on the other hand, what we sense is too strong, what we anticipate is modified.
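
One common way to picture this push and pull is precision-weighted averaging, where each source pulls the resulting belief toward itself in proportion to how reliable (precise) it is taken to be. The sketch below uses standard Bayesian combination of two Gaussian estimates as an analogy; the function name and the precision values are illustrative assumptions, not part of Prof. Tani's model.

```python
# Toy illustration of top-down / bottom-up balance as precision-weighted
# averaging of two Gaussian estimates (standard Bayesian cue combination,
# used here as an analogy only).

def combine(expected: float, expected_precision: float,
            sensed: float, sensed_precision: float) -> float:
    """Return a belief balancing expectation (top-down) and sensation (bottom-up).

    Precision is inverse variance: the more precise side pulls harder.
    """
    total = expected_precision + sensed_precision
    return (expected_precision * expected + sensed_precision * sensed) / total


if __name__ == "__main__":
    expected, sensed = 0.0, 1.0
    # Strong expectation: the sensation is largely disregarded.
    print(combine(expected, 99.0, sensed, 1.0))   # 0.01
    # Strong sensation: the expectation is revised toward what was sensed.
    print(combine(expected, 1.0, sensed, 99.0))   # 0.99
```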

“When the two agree with each other, everything goes smoothly,” Prof. Tani said. “But when conflicts arise between them, the harmony is broken. At this moment, the system is required to minimize the conflicts in terms of the free energy. This effort for minimizing free energy may make the system ‘conscious’ wherein what we anticipate and what we sense from the world can be seen as separable entities in their breakdown.”

To gain insight into the cognitive basis of the free energy principle, Prof. Tani and his Unit developed an abstract brain model. Called PV-RNN, the model minimizes free energy while learning, predicting, and recognizing patterns across space and time. It is similar to the models used in various deep learning applications, such as Apple’s Siri and Google’s voice search.

The researchers incorporated an additional parameter, w (also known as the meta-prior), into their model and found that it had an interesting effect on prediction. With a larger w, the network tended to predict the next sensation (or movement) as a deterministic event, whereas with a smaller w, it predicted it as a non-deterministic one.

The Unit has previously examined how varying w affected the way two robots interacted, drew conclusions, and updated their behavior. In these experiments, the two robots were taught different movement patterns that were regular but contained some randomness. The researchers found that the difference in the value of w determined whether each robot generated its own patterns, followed the other robot by predicting its counterpart’s movements, or ended up somewhere in between. When w was high, the robot confidently generated its own movements whilst ignoring the other robot. When w was low, it tended to adapt to the other robot, with little intention or confidence to generate its own patterns. This additional parameter therefore shapes whether leading or following behavior develops in social cognitive settings. It also raises the possibility that our confidence in the world, and in ourselves, might be regulated by certain neurochemicals in daily life.
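
The leading-versus-following effect of w can be caricatured in one dimension. The sketch below assumes, as is common in variational recurrent networks, that w multiplies a KL (complexity) term between a posterior q and a prior p, while an accuracy term scores the observation. Here the prior stands for a robot's own expectation and the observation for the other robot's movement. All names and the Gaussian setup are illustrative assumptions; this is not the PV-RNN implementation, and it does not reproduce the deterministic/non-deterministic effect, which emerges from training.

```python
import math

# Toy 1-D sketch of a free energy with a meta-prior weight w on the KL term.
# Assumptions: prior p = N(mu_p, var_p) is the robot's own expectation,
# posterior q = N(mu_q, var_q), and the observation x is modeled as N(z, var_x).

def kl_gaussian(mu_q: float, var_q: float, mu_p: float, var_p: float) -> float:
    """KL(q || p) between two univariate Gaussians."""
    return 0.5 * (math.log(var_p / var_q) + (var_q + (mu_q - mu_p) ** 2) / var_p - 1.0)


def weighted_free_energy(x: float, mu_q: float, var_q: float,
                         mu_p: float, var_p: float, var_x: float, w: float) -> float:
    """Expected prediction error of x under q, plus w times KL(q || p)."""
    accuracy_term = ((x - mu_q) ** 2 + var_q) / (2.0 * var_x)
    return accuracy_term + w * kl_gaussian(mu_q, var_q, mu_p, var_p)


def best_posterior_mean(x: float, mu_p: float, var_p: float,
                        var_x: float, w: float) -> float:
    """mu_q minimizing weighted_free_energy, from setting its derivative to zero."""
    return (x / var_x + w * mu_p / var_p) / (1.0 / var_x + w / var_p)


if __name__ == "__main__":
    own_expectation, observed = 0.0, 1.0
    for w in (0.01, 1.0, 100.0):
        belief = best_posterior_mean(observed, own_expectation, 1.0, 1.0, w)
        print(f"w={w:>6}: belief={belief:.3f}")
```

With these settings the belief is about 0.99 for w = 0.01 (the robot follows what it observes), 0.5 for w = 1, and about 0.01 for w = 100 (it sticks to its own expectation).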

Building on this, the researchers have also studied human-robot interaction using a humanoid robot implemented with the same model. This allowed Prof. Tani and his colleagues to examine both the person’s psychological state and the robot’s “neural state”.

“The results from these simulation experiments and others indicate that PV-RNN can develop the cognitive mechanisms for self-reflection depending on the setting of w,” Prof. Tani concluded. “This model gives robots the metacognitive capability of monitoring their own confidence in predicting sensation at each moment in embodied and social cognitive contexts.”
